The Ever-Evolving World of AI: Future Directions and Recent Trends in Computer Science

The field of computer science is constantly evolving, with new trends emerging all the time. Among the most notable trends in recent years are those related to artificial intelligence (AI), which has grown at an unprecedented pace and become an integral part of many industries. AI is the branch of computer science that aims to create intelligent machines capable of simulating human reasoning and other cognitive abilities. In this article, we explore some of the most important trends in computer science and how they relate to the current state of AI. We look at the development of machine learning algorithms, natural language processing systems, and computer vision technologies, as well as how these tools are being integrated into various industries and their impact on society as a whole.

Join us on an in-depth exploration of the future trends likely to shape AI in the coming years, such as advances in quantum computing, the increasing use of explainable AI, and the growing importance of ethical considerations in AI development.

A History and Overview of Key Concepts and Advancements

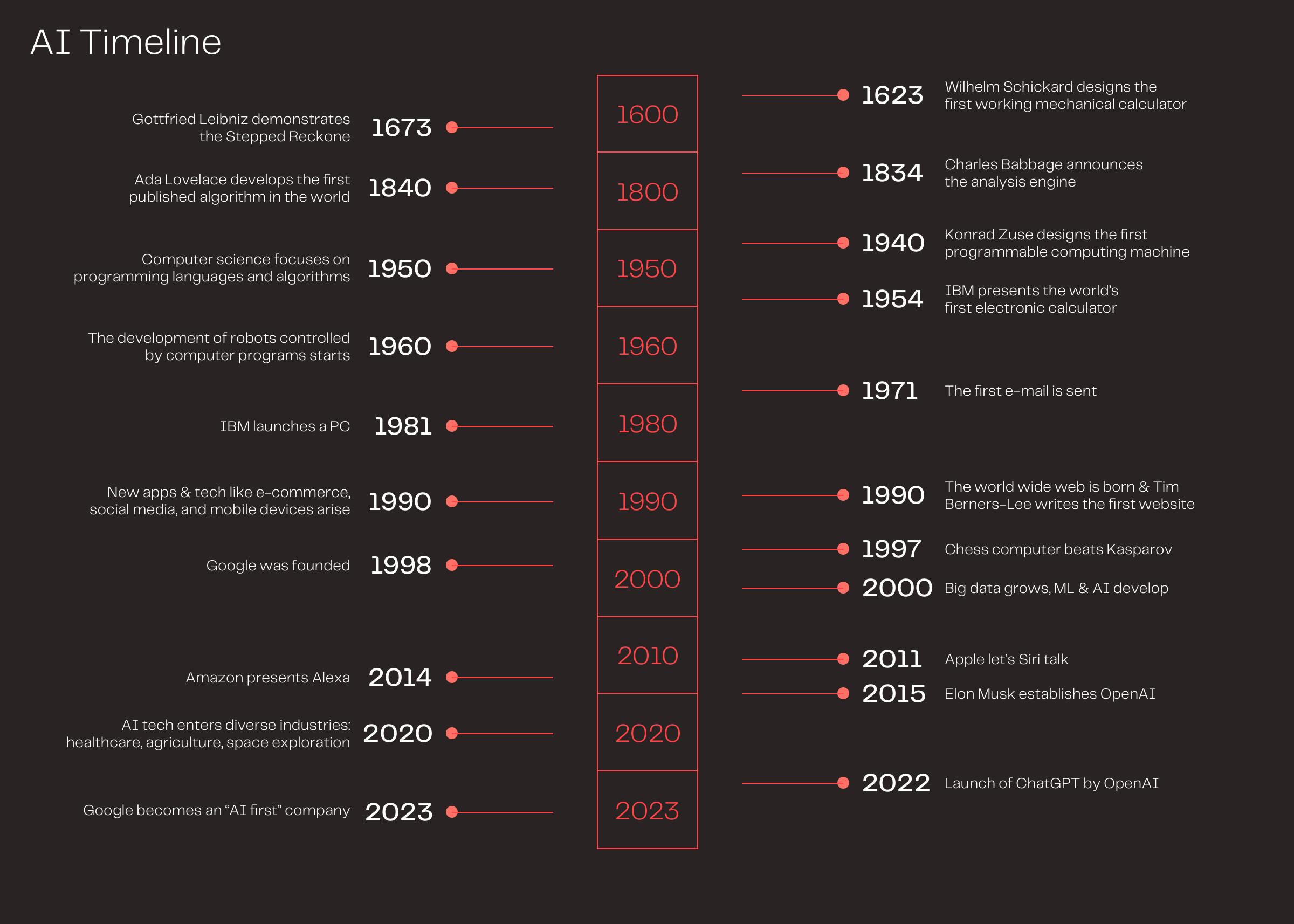

Computer science is an interdisciplinary field that encompasses the study of computation, information, and automation. It is both theoretical and applied, spanning algorithms, the theory of computation, and information theory as well as hardware and software design and implementation. Its roots reach back to devices for performing fixed numerical tasks, such as the abacus. Algorithms and data structures are central concepts in the field.

The first mechanical calculating machines appeared in the 17th century. Wilhelm Schickard designed the first working mechanical calculator in 1623, and in 1673 Gottfried Leibniz demonstrated a digital mechanical calculator called the Stepped Reckoner. Leibniz also documented the binary number system, which is one reason he is sometimes considered the first computer scientist and information theorist.

Charles Babbage and Ada Lovelace are two important figures in the history of computing. Babbage's work on the Difference Engine and the Analytical Engine laid the foundation for modern computing, while Lovelace's recognition of the Analytical Engine's potential and her development of what is widely regarded as the first published algorithm intended for a machine helped shape the field of computer science.

In the 1940s, the first digital computers were developed. These early computers were massive, room-sized machines that required specialized expertise to operate. In the 1950s, computer science emerged as a discipline focused on developing programming languages and algorithms to make these machines more accessible to a wider range of people.

In the 1960s and 1970s, researchers began developing robots that could be controlled by computer programs, which enabled more precise control and automation of tasks. Industrial robots soon revolutionized manufacturing by automating assembly-line tasks.

Trends in computer science evolved rapidly

The rapid spread of the Internet and the World Wide Web in the 1990s led to new applications and technologies such as e-commerce and, later, social media and mobile computing. This period also saw the rise of computer networking and the development of new programming languages such as Java and Python.

The integration of computer vision and machine learning technologies in the 1980s and 1990s allowed for the development of more sophisticated robots capable of sensing and adapting to their environment. This led to mobile robots that can navigate complex environments, such as warehouses and hospitals.

From Trends in Computer Science to Data Science: The Rise of AI and Big Data

In the early 2000s, the field of computer science began to shift towards data science and artificial intelligence. The growth of big data and the development of machine learning algorithms led to the emergence of new applications and technologies, such as predictive analytics, natural language processing, and computer vision.

Today, integrating AI into existing technologies is producing more advanced systems that can operate autonomously and perform tasks that were previously impossible. AI-powered systems are being used in a wide range of industries, from healthcare to agriculture to space exploration. Many of these systems also collect large amounts of data, which is crucial for developing and training AI algorithms. For example, autonomous vehicles are equipped with sensors that gather vast amounts of data about their surroundings, which is then used to train AI algorithms to recognize and respond to different driving scenarios.

The Building Blocks of AI: How Previous Research Shaped the Foundation of Artificial Intelligence

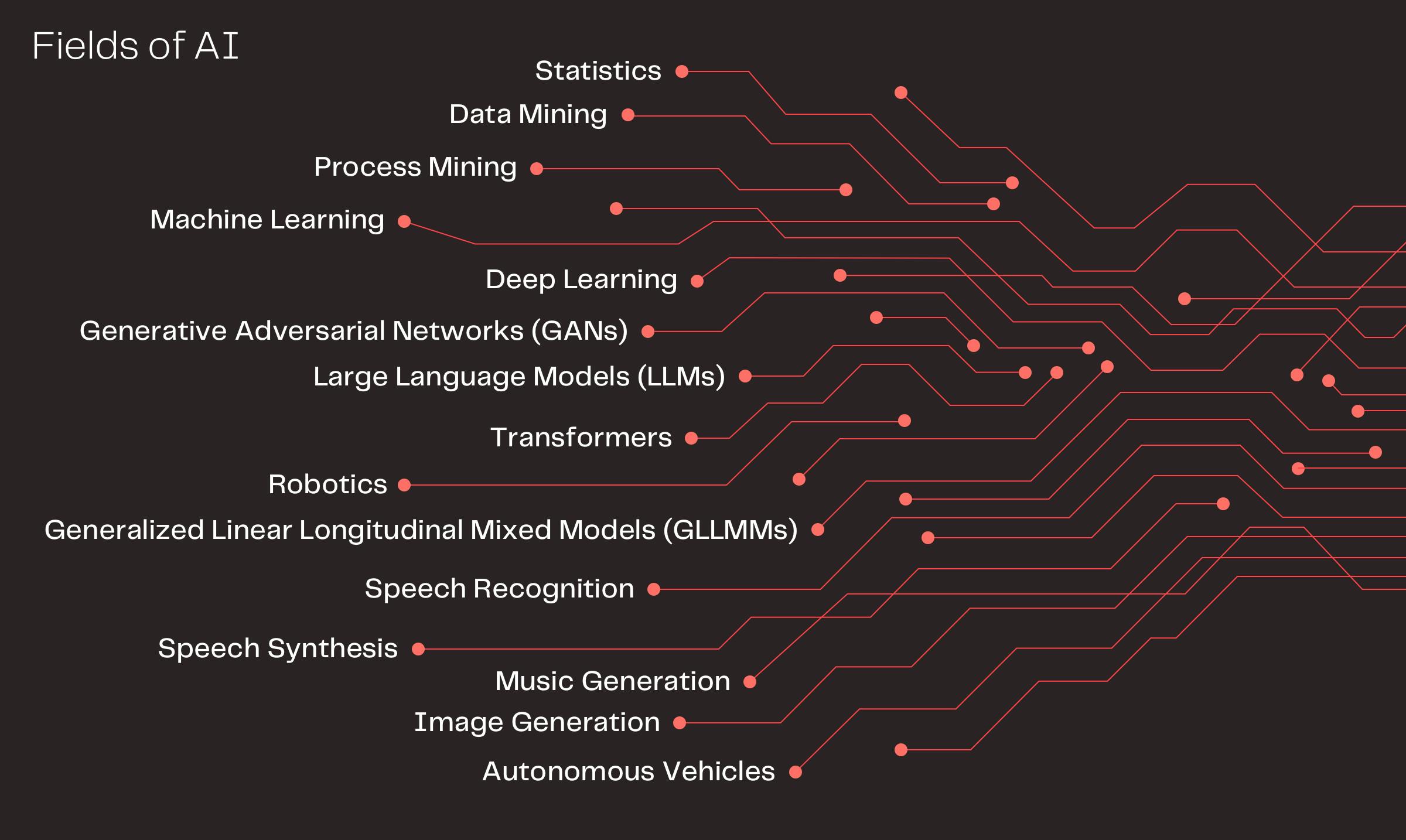

Earlier research in computer vision, speech recognition, speech synthesis, robotics, music generation, image generation, and autonomous vehicles laid the foundation for the current state of AI. These advances enabled machines to understand and interpret human language, recognize patterns in images, and perform complex tasks autonomously. AI draws on many fields of study, including mathematics, statistics, computer science, engineering, linguistics, and psychology. Let's look at some of the most important building blocks.

Statistics

Statistics supplies much of the mathematical toolkit behind AI. It provides methods for summarizing data, estimating unknown quantities from samples, and quantifying uncertainty. Many machine learning methods, such as linear and logistic regression, are statistical models at their core, and statistical techniques are also used to evaluate how well a model's predictions generalize to new data.

Data mining

With the exponential growth of data in recent years, data mining has become an essential tool for extracting insights from vast amounts of information. It is a process of discovering patterns and relationships in large datasets. Machine learning algorithms, such as decision trees, support vector machines, and neural networks, are used extensively in data mining to identify patterns and relationships in data. This information helps businesses to better understand their customers and make data-driven decisions.
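As a minimal sketch of pattern discovery, assuming a handful of hypothetical shopping baskets, the code below counts how often pairs of items are bought together and keeps the pairs whose support (the share of baskets containing the pair) meets a threshold. This frequent-itemset counting is the first step of association-rule mining, a classic data mining technique that is not among the algorithms named above; the function name `frequent_pairs` and the data are illustrative only.

```python
from collections import Counter
from itertools import combinations

# Hypothetical transaction data: each set is one customer's basket
BASKETS = [
    {"bread", "butter", "milk"},
    {"bread", "butter"},
    {"milk", "cereal"},
    {"bread", "butter", "jam"},
    {"milk", "cereal", "bread"},
]

def frequent_pairs(baskets, min_support):
    """Return item pairs whose support (fraction of baskets) is >= min_support."""
    pair_counts = Counter()
    for basket in baskets:
        # Sort so each pair is counted under one canonical ordering
        for pair in combinations(sorted(basket), 2):
            pair_counts[pair] += 1
    n = len(baskets)
    return {pair: count / n for pair, count in pair_counts.items()
            if count / n >= min_support}

print(frequent_pairs(BASKETS, 0.4))
```

With a 40% threshold, only bread with butter, bread with milk, and cereal with milk survive, which is the kind of relationship a retailer might use to understand customer behavior.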

Process mining

Process mining is a technique for analyzing business processes based on event data. It involves extracting event logs from various systems and then identifying process flows, bottlenecks, and inefficiencies from these logs. Process mining techniques can be combined with machine learning algorithms to automatically identify patterns and anomalies in process data. Together, these methods help organizations improve processes and optimize operations.
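The core idea of process mining can be sketched in a few lines, assuming a hypothetical event log in which each event is a (case ID, activity, timestamp in hours) tuple. The sketch builds a simple directly-follows relation, counting how often one activity immediately follows another within the same case, and averages the time between them; the transition with the longest average wait is a candidate bottleneck. Dedicated process mining tools use richer discovery algorithms, and `directly_follows` is a hypothetical helper, not a library API.

```python
from collections import defaultdict

# Hypothetical event log: (case_id, activity, timestamp in hours)
EVENT_LOG = [
    ("order-1", "receive", 0.0), ("order-1", "approve", 2.0), ("order-1", "ship", 10.0),
    ("order-2", "receive", 1.0), ("order-2", "approve", 2.0), ("order-2", "ship", 14.0),
    ("order-3", "receive", 3.0), ("order-3", "ship", 5.0),
]

def directly_follows(log):
    """Map (a, b) -> (count, average hours) for b directly following a in a case."""
    traces = defaultdict(list)
    for case_id, activity, ts in log:
        traces[case_id].append((ts, activity))
    counts = defaultdict(int)
    total_time = defaultdict(float)
    for events in traces.values():
        events.sort()  # order each case's events by timestamp
        for (t1, a), (t2, b) in zip(events, events[1:]):
            counts[(a, b)] += 1
            total_time[(a, b)] += t2 - t1
    return {pair: (counts[pair], total_time[pair] / counts[pair]) for pair in counts}

dfg = directly_follows(EVENT_LOG)
bottleneck = max(dfg, key=lambda pair: dfg[pair][1])
print(bottleneck, dfg[bottleneck])  # ('approve', 'ship') (2, 10.0)
```

In this toy log, order-3 also skips the approval step entirely, the kind of deviation from the expected process flow that process mining is meant to surface.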

Machine learning

Machine learning enables computer systems to learn and improve from experience without being explicitly programmed. In traditional programming, a human developer writes code that tells a computer exactly what to do. In machine learning, by contrast, a program is trained on a dataset and automatically learns patterns and relationships from the data. This allows machines to make decisions, identify patterns, and make predictions without a hand-written rule for each specific task.
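To illustrate learning from data rather than from explicit rules, here is a minimal sketch that fits a straight line to a few toy data points using ordinary least squares. No rule such as "multiply by 2 and add 1" is written into the code; the slope and intercept are estimated from the examples and can then be used to predict unseen inputs. Real machine learning systems use far more data and more flexible models, and `fit_line` is an illustrative name.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Toy training data; the underlying rule is never given to the program
xs = [0, 1, 2, 3, 4]
ys = [1, 3, 5, 7, 9]
slope, intercept = fit_line(xs, ys)
print(slope, intercept)        # 2.0 1.0
print(slope * 10 + intercept)  # 21.0, a prediction for an unseen input
```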

Deep learning

Deep learning is a subfield of machine learning that trains artificial neural networks with many layers to recognize patterns in data. Neural networks are a type of machine learning model inspired by the structure and function of the human brain. Deep learning algorithms can process large amounts of complex data, such as images or audio, and identify patterns that humans might not be able to detect.
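Why stacking layers matters can be shown with the XOR function, which no single linear unit can compute. The sketch below is a two-layer network with ReLU hidden units; its weights are set by hand, following the well-known example in Goodfellow, Bengio and Courville's Deep Learning textbook, rather than learned. In actual deep learning, training algorithms such as backpropagation with gradient descent find weights like these automatically from data.

```python
def relu(x):
    """Rectified linear unit: passes positive values, zeroes out negatives."""
    return max(0.0, x)

def xor_network(x1, x2):
    # Hidden layer: two ReLU units, both with input weights [1, 1], biases 0 and -1
    h1 = relu(x1 + x2)
    h2 = relu(x1 + x2 - 1)
    # Output layer: linear combination of hidden units with weights [1, -2]
    return h1 - 2 * h2

for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, xor_network(a, b))
```

The hidden layer re-represents the inputs so that the output layer can separate them with a simple linear rule, which is the basic reason deeper networks can capture patterns a single layer cannot.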

Generative Adversarial Networks (GANs)

GANs are a deep learning framework made up of two neural networks: a generator and a discriminator. The generator creates new data samples that resemble the original dataset, while the discriminator evaluates samples to determine whether they are real or generated. The two networks are trained together in a game-like manner, with the generator trying to produce samples that the discriminator cannot distinguish from the original data. GANs have been used to generate realistic images, music, and even 3D models.
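The game-like training of a GAN is commonly written as a minimax objective, as in the original formulation by Goodfellow et al. (2014). Here D(x) is the discriminator's estimated probability that x comes from the real data distribution p_data, and G(z) is the sample the generator produces from random noise z drawn from a prior p_z. The discriminator tries to maximize V, while the generator tries to minimize it.

```latex
\min_{G} \max_{D} \; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]
```

The first term rewards the discriminator for recognizing real samples; the second rewards it for rejecting generated ones, which is exactly what the generator works against.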

Transformers

Transformers are a type of deep learning architecture that lets machines process sequences of data, such as text, more efficiently. They were introduced in 2017 by researchers at Google and have since become a central tool in natural language processing (NLP). Earlier sequence models, such as recurrent neural networks (RNNs), process data one step at a time, which can be slow and inefficient for long sequences. Transformers instead use an attention mechanism to process all positions of a sequence in parallel, making them much faster and more efficient to train. They have been used to build state-of-the-art language models such as GPT-3, which can generate human-like text and perform complex language tasks such as translation and summarization.
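The mechanism that lets transformers look at a whole sequence at once is attention. Below is a minimal, dependency-free sketch of scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, assuming small lists of vectors as input. For readability it loops over queries; real implementations compute all positions with a single matrix multiplication, which is what enables the parallel processing described above. Multi-head attention, masking, and learned projection matrices are omitted.

```python
import math

def softmax(scores):
    # Subtract the max for numerical stability before exponentiating
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(queries, keys, values):
    """Return one output vector per query plus the attention weights used."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Output is the attention-weighted average of all value vectors
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
        all_weights.append(weights)
    return outputs, all_weights

# Toy sequence of three token vectors used as queries, keys and values (self-attention)
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, weights = scaled_dot_product_attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row shows how strongly one token attends to every token in the sequence, including itself; no step depends on a previous step's output, unlike an RNN.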

Large Language Models (LLMs)

Recently, there has been significant interest in Large Language Models (LLMs) and, more broadly, Generative Large Language Multi-modal Models (GLLMMs), sometimes nicknamed "Golem-class" AIs. These models are becoming increasingly popular because they enable machines to understand and generate human-like language, driving advances in natural language processing. ChatGPT, a chatbot built on OpenAI's GPT models, is one prominent example.

The field of AI is moving so rapidly that before we have fully come to grips with one model, the next one arrives. GPT is a case in point: ChatGPT, based on GPT-3.5, was released only in November 2022, and GPT-4 followed in March 2023. GPT-4 is reported to have enhanced safety and privacy measures, to handle longer input and output text, and to deliver more precise and detailed responses to complex queries.
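At their core, LLMs such as the GPT models generate text by repeatedly predicting the next token from the ones that came before. The toy sketch below shows that loop with a bigram model, which predicts each word purely from counts of which word followed it in a tiny corpus. It only illustrates next-word prediction: real LLMs use transformer networks with billions of parameters trained on vast amounts of text. The corpus and the names `train_bigram_model` and `generate` are hypothetical.

```python
from collections import Counter, defaultdict

def train_bigram_model(text):
    """Count, for each word, which words follow it and how often."""
    words = text.split()
    counts = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        counts[current][nxt] += 1
    return counts

def generate(model, start, length):
    """Greedily append the most frequent next word, up to `length` words."""
    words = [start]
    for _ in range(length):
        followers = model.get(words[-1])
        if not followers:
            break  # no known continuation for this word
        # Ties go to the continuation seen first in the corpus
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)

bigrams = train_bigram_model("the cat sat on the mat the cat ate the fish")
print(generate(bigrams, "the", 4))  # the cat sat on the
```

Swapping the count table for a neural network that looks at the whole preceding context, and sampling instead of always taking the top word, moves this loop toward how modern language models produce text.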

Bias, Deepfakes and AGI: The Challenges and Impact of AI Across Industries and Society

AI has become ubiquitous and is being used across industries and fields to enhance efficiency, productivity, and accuracy. However, with its numerous benefits come several challenges that need to be addressed.

One of the biggest challenges is the issue of bias and fairness in AI. Since AI is only as good as the data it's trained on, if the data is biased, the AI will be biased as well. This can have serious implications, particularly in fields such as healthcare and finance, where biased algorithms can lead to unfair decisions.

Another area where AI is making a significant impact is AI art, which involves using AI to create new and innovative artworks. While this has led to some fascinating works of art, it has also raised questions about the role of the artist and the creative process.

Deepfakes pose another danger. They use AI to manipulate audio and video recordings and create fake content. This can have serious consequences, particularly in politics, where deepfakes can be used to spread false information and influence public opinion.

AI is also automating jobs, which is leading to concerns about unemployment and the need for re-skilling the workforce. However, AI is also creating new job opportunities in areas such as AI research and development.

Artificial general intelligence, or AGI, is another area of AI that has garnered attention. AGI refers to machines that have human-like intelligence and can perform a wide range of tasks without being explicitly programmed. While we're still far from achieving AGI, it's a topic of active research and debate.

Researchers are also exploring the use of AI to decode dreams from electroencephalography (EEG) data, analyzing brain activity to infer what people are dreaming about. While this has the potential to improve our understanding of the human brain, it also raises concerns about privacy and the use of personal data.

The Power of AI: Exploring Prominent Use Cases and Innovations in Recent Years

Prominent examples of AI use cases in recent years include Cambridge Analytica, which used data-driven profiling to target voters in political campaigns; Snapchat filters, which use AI to create augmented reality experiences; and the recommendation engines of Spotify, Netflix, and Amazon, which use AI to personalize content for users. AI-powered language models such as ChatGPT and image generation models such as DALL-E also show how AI is advancing natural language processing and computer vision.
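Recommendation engines often rely on collaborative filtering: users who rated items similarly in the past are assumed to share tastes. The sketch below is a minimal user-based version with hypothetical ratings. It scores items a user has not rated by summing other users' ratings, each weighted by that user's cosine similarity to the target user. Production systems at companies such as Spotify, Netflix, or Amazon are far more elaborate, and this is not a description of how any of them actually works.

```python
import math

# Hypothetical ratings on a 1-5 scale
RATINGS = {
    "alice": {"Film A": 5, "Film B": 4, "Film C": 1},
    "bob": {"Film A": 4, "Film B": 5, "Film D": 4},
    "carol": {"Film C": 5, "Film D": 2, "Film E": 5},
}

def cosine_similarity(u, v):
    """Cosine similarity of two sparse rating vectors (missing ratings count as 0)."""
    dot = sum(u[item] * v[item] for item in u.keys() & v.keys())
    norm_u = math.sqrt(sum(r * r for r in u.values()))
    norm_v = math.sqrt(sum(r * r for r in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

def recommend(user, ratings):
    """Rank unrated items by a similarity-weighted sum of other users' ratings."""
    scores = {}
    for other, their_ratings in ratings.items():
        if other == user:
            continue
        sim = cosine_similarity(ratings[user], their_ratings)
        for item, rating in their_ratings.items():
            if item not in ratings[user]:
                scores[item] = scores.get(item, 0.0) + sim * rating
    return sorted(scores, key=scores.get, reverse=True)

print(recommend("alice", RATINGS))  # ['Film D', 'Film E']
```

Film D ranks first for alice because bob, whose ratings closely match hers, liked it; carol's enthusiasm for Film E counts for less because her tastes differ from alice's.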

Future trends in Computer Science and AI

As AI continues to advance, researchers and experts are increasingly focused on addressing challenges and ensuring that AI is developed and deployed in an ethical, responsible, and sustainable manner.

One important area of research is explainable AI (XAI): developing AI systems that are transparent and can explain their decision-making processes. This is crucial for making AI trustworthy and accountable, and it can help mitigate concerns about bias and unfairness in AI systems.
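One widely used, model-agnostic way to explain a model's behavior is permutation feature importance: scramble one input feature at a time and measure how much the model's error grows. A feature the model relies on causes a large increase; a feature it ignores causes none. The sketch below assumes a hypothetical model that uses only its first feature, and it replaces the usual random shuffle with a cyclic shift of the column so the result is deterministic.

```python
def mean_squared_error(model, rows, targets):
    return sum((model(row) - t) ** 2 for row, t in zip(rows, targets)) / len(rows)

def permutation_importance(model, rows, targets):
    """Increase in error when each feature column is scrambled.

    A cyclic shift of the column stands in for the usual random shuffle,
    so the result is deterministic."""
    baseline = mean_squared_error(model, rows, targets)
    importances = []
    for j in range(len(rows[0])):
        column = [row[j] for row in rows]
        shifted = column[1:] + column[:1]
        perturbed = [row[:j] + [value] + row[j + 1:] for row, value in zip(rows, shifted)]
        importances.append(mean_squared_error(model, perturbed, targets) - baseline)
    return importances

# Hypothetical model that uses feature 0 and ignores feature 1
def model(row):
    return 2 * row[0]

rows = [[1.0, 5.0], [2.0, 3.0], [3.0, 8.0], [4.0, 1.0]]
targets = [2.0, 4.0, 6.0, 8.0]
print(permutation_importance(model, rows, targets))  # [12.0, 0.0]
```

The explanation treats the model as a black box, so the same procedure works for a neural network as for this one-line function.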

Bias in particular remains a significant challenge that requires continued attention. AI systems can inherit and amplify biases from their data sources, resulting in unfair or discriminatory outcomes. To address this, researchers are developing techniques such as data preprocessing, bias detection and mitigation, and diversity-aware learning to make AI systems fairer.
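Bias detection often starts with simple group-level metrics. A minimal sketch, assuming binary predictions (1 = a favorable outcome such as a loan approval) and a hypothetical group label per person: it computes each group's selection rate and the ratio of the lowest to the highest rate, often called the disparate impact ratio. A common rule of thumb, the "four-fifths rule" from US employment selection guidelines, flags ratios below 0.8. This is one fairness metric among many, and the data are made up.

```python
def selection_rates(predictions, groups):
    """Share of favorable (1) predictions within each group."""
    totals, favorable = {}, {}
    for pred, group in zip(predictions, groups):
        totals[group] = totals.get(group, 0) + 1
        favorable[group] = favorable.get(group, 0) + pred
    return {g: favorable[g] / totals[g] for g in totals}

def disparate_impact_ratio(predictions, groups):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    rates = selection_rates(predictions, groups)
    highest = max(rates.values())
    # If no group receives any favorable outcome, the rates are trivially equal
    return min(rates.values()) / highest if highest > 0 else 1.0

# Hypothetical predictions for two groups, "a" and "b"
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
ratio = disparate_impact_ratio(preds, groups)
print(selection_rates(preds, groups))  # {'a': 0.75, 'b': 0.25}
print(ratio < 0.8)  # True: flagged under the four-fifths rule of thumb
```

A flag like this does not prove discrimination by itself, but it tells developers where to look more closely before deploying a model.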

Last but not least, sustainability is another crucial area of focus for AI research. The energy consumption and carbon footprint of AI systems can be significant, and researchers are working to develop more efficient and sustainable AI architectures and algorithms.
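A rough estimate of a training run's footprint multiplies the hardware's average power draw by running time and by the data center's power usage effectiveness (PUE), then converts energy to emissions using the carbon intensity of the local electricity grid. The sketch below encodes that back-of-the-envelope formula; the function name is hypothetical, and all input values in the usage line are placeholders, not measurements of any real system.

```python
def training_footprint(num_gpus, avg_power_watts, hours, pue, grid_kg_co2e_per_kwh):
    """Back-of-the-envelope energy (kWh) and emissions (kg CO2e) for a training run."""
    # IT energy: devices x average power x time, converted from watt-hours to kWh
    it_energy_kwh = num_gpus * avg_power_watts * hours / 1000
    # PUE scales IT energy up to include cooling and other facility overhead
    facility_energy_kwh = it_energy_kwh * pue
    emissions_kg = facility_energy_kwh * grid_kg_co2e_per_kwh
    return facility_energy_kwh, emissions_kg

# Hypothetical inputs: 8 GPUs at 300 W for 24 hours, PUE 1.5, 0.4 kg CO2e per kWh
energy, co2 = training_footprint(8, 300, 24, 1.5, 0.4)
print(f"{energy:.1f} kWh, {co2:.1f} kg CO2e")
```

Each factor in the formula points to a lever researchers can pull: more efficient algorithms shorten training time, better hardware lowers power draw, and efficient data centers powered by cleaner grids reduce the remaining terms.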

From the Niche to Mainstream: AI for all

While the use of AI was limited to a specific group of professional users for a long time, the focus is now on democratizing AI by making it accessible to a wider range of people and organizations. User-friendly tools and platforms allow non-experts to use and benefit from AI technology.

Google is one company leading the way. At its annual developer conference, Google I/O, in May 2023, the company presented its latest announcements and clearly positioned itself as an "AI-first" company. AI is to be integrated across Google's products and services, so users will not need a single dedicated webpage to write AI prompts; instead, they can try the technology directly in Search, Google Workspace, Maps, Photos, Gmail, and other applications. Google's headline, "Making AI helpful for everyone," promises a lot, and the company appears well positioned to deliver on it.

Towards Humanistic AI: Embracing Collaboration and Empathy for the Future of Artificial Intelligence

Already today, AI is impacting human emotions and relationships, particularly in the context of loneliness and social isolation. While AI-powered virtual assistants and chatbots can provide companionship and support, they also raise questions about the nature of human connection and the potential for emotional manipulation.

Looking ahead, the focus is likely to shift toward making AI more humanistic: AI should be developed to augment and support human capabilities rather than replace them. This includes designing AI systems that are empathetic, cooperative, and able to interact with humans in a natural and intuitive way.

Getting there will require continuous research and innovation, but it is the only way we can address these challenges and ensure that AI is developed and used in a way that benefits everyone.